Use cases

Decrease upstream traffic

When multiple concurrent requests for the same object hit an origin shield cache, the cache must communicate efficiently with Unified Origin so that as few requests as possible go upstream.

An origin shield cache can use different techniques to reduce the number of requests going to upstream servers. One of the most popular is request collapsing.

Request collapsing

This method minimizes the load at Unified Origin: the origin shield cache collapses multiple requests for the same object's URI into a single upstream request, waits for its response, and then uses that response to answer every request that asked for the same object. In most popular web servers, request collapsing can be enabled by using cache locking or by serving stale content to the client while revalidating it against Unified Origin.

The following figure illustrates the cache stampede (or thundering herd) problem, which can occur when multiple concurrent requests for the same object's URI hit an HTTP proxy. If it is not configured correctly, the HTTP proxy server forwards all the requests to the origin server, which can generate a high load and potentially increase the latency towards media players.

     -----------       --------------
    [  Unified  ] <-- [  HTTP Proxy  ] <-- client request 1
    [  Origin   ] <-- [  server      ] <-- .
    [           ] <-- [              ] <-- .
    [           ] <-- [              ] <-- .
    [           ] <-- [              ] <-- .
    [           ] <-- [              ] <-- client request N
     -----------       --------------

After applying a technique such as request collapsing we can reduce the load hitting Unified Origin.

     -----------       -----------------
    [  Unified  ]     [  Origin shield  ] <-- client request 1
    [  Origin   ]     [  cache          ] <-- .
    [           ] <-- [                 ] <-- .
    [           ]     [                 ] <-- .
    [           ]     [                 ] <-- .
    [           ]     [                 ] <-- client request N
     -----------       -----------------
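The collapsing behavior in the figure can be sketched in a few lines of Python. This is only an illustration of the idea, not how Nginx or Varnish Cache implement it: the `CollapsingCache` class and the `fetch` callable (which stands in for the request to Unified Origin) are hypothetical names, and the cache never expires entries.

```python
import threading
from concurrent.futures import Future


class CollapsingCache:
    """Collapse concurrent misses for the same URI into one upstream fetch."""

    def __init__(self, fetch):
        self._fetch = fetch        # hypothetical callable: uri -> response body
        self._lock = threading.Lock()
        self._cache = {}           # uri -> cached body (no expiry in this sketch)
        self._in_flight = {}       # uri -> Future shared by all waiting requests

    def get(self, uri):
        with self._lock:
            if uri in self._cache:
                return self._cache[uri]
            future = self._in_flight.get(uri)
            leader = future is None
            if leader:
                # First request for this URI: it will go upstream.
                future = Future()
                self._in_flight[uri] = future
        if not leader:
            # Another request is already upstream: wait for its response.
            return future.result()
        try:
            body = self._fetch(uri)
        except Exception as exc:
            with self._lock:
                del self._in_flight[uri]
            future.set_exception(exc)
            raise
        with self._lock:
            self._cache[uri] = body
            del self._in_flight[uri]
        future.set_result(body)
        return body
```

With N threads calling `get()` for the same URI, only the first one invokes `fetch`; the others either wait on the shared `Future` or are served from the cache.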

Note

Currently, the Apache web server's mod_cache module (with the CacheLock on directive) does not support request collapsing for new cache entries. Enabling CacheLock on only activates request collapsing for stale (previously cached) objects. Therefore, we recommend using Nginx or Varnish Cache to implement an origin shield cache. See also hint.

HTTP response headers

Reduce latency at the cache layer

New in version 1.14.3.

The Cache-Control header was introduced with HTTP/1.1 (it is specified in RFC 7234) and focused on storing static objects, primarily HTML files, in the cache. Servers read these HTTP response headers and store the objects in memory for a fixed number of seconds until they expire (TTL). This approach works well for browsers, where latency is less critical.

Media delivery over HTTP has evolved since then, and it now demands lower latencies for scenarios such as live streaming, where media can be produced in intervals shorter than one second.

Usp-Cache-Control: max-age-ms="..." enables media distributors (e.g., CDNs and edge caches) to store media objects with millisecond precision and to reduce media distribution latency.
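As a rough sketch of how a cache layer might consume this header, the following Python function prefers the millisecond value and falls back to Cache-Control: max-age. The `ttl_seconds` name and the plain dictionary of headers are assumptions for illustration, and the parsing only covers the max-age-ms="..." form shown above.

```python
import re

# Matches max-age-ms="1500" (quotes optional in this sketch).
_MAX_AGE_MS = re.compile(r'\bmax-age-ms\s*=\s*"?(\d+)"?')
# Matches max-age=2 in a regular Cache-Control header.
_MAX_AGE = re.compile(r'\bmax-age\s*=\s*"?(\d+)"?')


def ttl_seconds(headers):
    """Return the TTL in seconds, or None if no max-age is present.

    `headers` is assumed to be a dict with exact header names as keys.
    """
    match = _MAX_AGE_MS.search(headers.get("Usp-Cache-Control", ""))
    if match:
        return int(match.group(1)) / 1000.0
    match = _MAX_AGE.search(headers.get("Cache-Control", ""))
    if match:
        return float(match.group(1))
    return None
```

For example, a response carrying Usp-Cache-Control: max-age-ms="500" yields a TTL of half a second, which a seconds-based max-age cannot express.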

Media playlist compression

Most modern media players can request a compressed media playlist from an origin shield cache or a CDN. For example, a media player that supports compression sends an HTTP request with an Accept-Encoding: gzip header, indicating to the origin shield cache that the player accepts gzip compression for that file.

The compression of media playlists can be carried out by two different methods, depending on your use case and requirements:

Unified Origin provides a compressed media playlist to the origin shield cache. This behavior is based on the value of the incoming request's Accept-Encoding header.

Unified Origin only replies with uncompressed media playlists, and the origin shield cache is in charge of compressing these files accordingly.

Note

This document explains the first approach.

| Output formats | Media type | Extension | Content-Type |
|---|---|---|---|
| MPEG-DASH | Manifest | mpd | application/dash+xml |
| HTTP Live Streaming (HLS) | Media Playlist | m3u8 | application/vnd.apple.mpegurl |
| HTTP Smooth Streaming (HSS) | Manifest | NA | text/xml |
| HTTP Dynamic Streaming (HDS) | Manifest | f4m | application/f4m+xml |
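Whichever component performs the compression, the decision it makes is the same: compress only playlist and manifest content types, and only when the request accepts gzip. The following Python sketch illustrates that decision using the content types from the table; the function names are hypothetical, and real servers make this choice through their own configuration.

```python
import gzip

# Content types of the playlists and manifests listed in the table above.
PLAYLIST_TYPES = {
    "application/dash+xml",
    "application/vnd.apple.mpegurl",
    "text/xml",
    "application/f4m+xml",
}


def accepts_gzip(accept_encoding):
    """Return True if the Accept-Encoding value lists gzip with q > 0."""
    for item in accept_encoding.split(","):
        parts = [p.strip().lower() for p in item.split(";")]
        if parts[0] != "gzip":
            continue
        for param in parts[1:]:
            if param.startswith("q="):
                try:
                    return float(param[2:]) > 0
                except ValueError:
                    return False
        return True
    return False


def maybe_compress(body, content_type, accept_encoding):
    """Return (body, extra_headers), gzip-compressing playlists on request."""
    media_type = content_type.split(";")[0].strip().lower()
    if media_type in PLAYLIST_TYPES and accepts_gzip(accept_encoding):
        extra = {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}
        return gzip.compress(body), extra
    return body, {}
```

Media segments (for example video/mp4) are left untouched, since they are already compressed by the codec.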

Note

Unified Origin will only generate a strong validator of the ETag header. In contrast, an Origin shield cache such as Nginx proxy or Varnish Cache will generate a weak ETag validator after applying compression (e.g., gzip) to the HTTP response. A weak validator of the ETag header is recognized by ETag: W/"${VALUE}".

Vary header

The Vary header is a powerful HTTP header that can help an origin shield cache store different variations of a cached object. This approach can be quite useful when operators have control of the player-side requests going towards the origin shield cache layer. A response uses the Vary header to list request headers, indicating that the cached response can only be reused for requests with matching values for those headers.
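One way to picture this is as an extended cache key: the URL plus the values of the request headers named in Vary. The sketch below is a simplified model under that assumption; the `vary_cache_key` function is hypothetical and does not handle Vary: *.

```python
def vary_cache_key(url, request_headers, vary):
    """Build a cache key from the URL plus the request headers named in Vary.

    `vary` is the value of the response's Vary header, e.g. "Accept-Encoding".
    Header names are compared case-insensitively.
    """
    lowered = {name.lower(): value for name, value in request_headers.items()}
    names = sorted(n.strip().lower() for n in vary.split(",") if n.strip())
    return (url,) + tuple((name, lowered.get(name, "")) for name in names)
```

Two requests for the same playlist URL, one with Accept-Encoding: gzip and one without, produce different keys and are therefore stored as separate variations.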

ETag header

Care must be taken when caching content at an origin shield cache or a CDN. In both VoD and Live streaming, Unified Origin always provides a strong ETag response header value. In Live streaming, Unified Origin also sets the Expires header, which the origin shield cache can use to cache the object. Therefore, we encourage caching objects at the origin shield cache or CDN by using the Expires header, and the ETag response value in combination with the If-None-Match request header.

The following shows the syntax of the request header that the origin shield cache and/or the CDN sends to Unified Origin.

If-None-Match: "<etag_value>"

If-None-Match: "<etag_value>", "<etag_value>", …
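To illustrate what happens on the receiving side of such a request, the sketch below evaluates an If-None-Match value against the current ETag and returns the status code a server would typically answer with. It is a simplified model with hypothetical function names: it uses the weak comparison that HTTP defines for If-None-Match, and it assumes that ETag values do not contain commas.

```python
def _opaque(tag):
    """Strip surrounding whitespace and an optional weak prefix (W/)."""
    tag = tag.strip()
    return tag[2:] if tag.startswith("W/") else tag


def revalidate(if_none_match, current_etag):
    """Return 304 if any listed validator matches the current ETag, else 200."""
    if if_none_match.strip() == "*":
        return 304
    candidates = [_opaque(tag) for tag in if_none_match.split(",")]
    return 304 if _opaque(current_etag) in candidates else 200
```

A 304 response lets the origin shield cache keep serving its stored copy without transferring the object body again.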

Prefetching of content

This method reduces the latency between an origin shield cache and downstream servers by warming up the cache. The cache requests the next available media object from the upstream server in advance, before a downstream server asks for it. This reduces the probability of cache misses at the origin shield cache.

Unified Origin provides the Link header as Prefetch Headers to increase

the cache hit ratio up to 25%, depending on your configuration.
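A cache that wants to act on these headers has to extract the target URI from the Link header and resolve it against the URL of the current request. The following Python sketch shows one way to do that. The relation type `next` is an assumption made for this example (check the Prefetch Headers documentation for the value that Unified Origin actually emits), and `prefetch_targets` is a hypothetical helper.

```python
import re
from urllib.parse import urljoin

# One link-value: <uri> followed by zero or more ;param pairs.
_LINK = re.compile(r'<([^>]*)>\s*((?:;\s*[^;,]+)*)')
_REL = re.compile(r';\s*rel\s*=\s*"?([^";]+)"?')


def prefetch_targets(link_header, request_url, rel="next"):
    """Return absolute URLs of all links whose rel contains `rel`."""
    targets = []
    for uri, params in _LINK.findall(link_header):
        match = _REL.search(params)
        if match and rel in match.group(1).split():
            # Relative references are resolved against the current request.
            targets.append(urljoin(request_url, uri))
    return targets
```

The cache can then request each returned URL from the upstream server in the background, so that the object is already stored when the player asks for it.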

Handle upstream server's limited lifetime

For Live video streaming only, Unified Origin provides the HTTP Sunset response header in media segments. The Sunset header is not concerned with resource state at all. It only indicates that the media segment is expected to become unavailable at a specific point in time. More details are explained in the Sunset header section of our documentation or in [RFC8594].

Note

Unified Origin will not write Cache-Control or Expires headers if the Sunset header is present. The exception is the HTTP Smooth Streaming (HSS) output format, for which Unified Origin writes both the Expires and Sunset headers.
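Since the Sunset header carries an HTTP date, a cache can turn it into an upper bound for how long a media segment is worth keeping. The sketch below shows that calculation; the function names are hypothetical, and capping the TTL at the sunset time is one possible policy rather than documented behavior of any particular cache.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def seconds_until_sunset(sunset_header, now=None):
    """Seconds until the Sunset time; 0.0 if it has already passed."""
    now = now or datetime.now(timezone.utc)
    sunset = parsedate_to_datetime(sunset_header)  # parses an HTTP date
    return max(0.0, (sunset - now).total_seconds())


def cap_ttl(ttl, sunset_header, now=None):
    """Never cache a segment for longer than it is expected to exist."""
    return min(ttl, seconds_until_sunset(sunset_header, now))
```

For instance, with a configured TTL of 120 seconds and a Sunset time 59 seconds away, `cap_ttl` returns 59 seconds.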

Master playlist in HLS streaming

Unified Origin provides Cache-Control and Expires response headers based on the type of video streaming case: VoD or Live. In Live use cases, no Cache-Control headers are set for HLS Master Playlists. Therefore, you may cache this "static" file at the origin shield cache for the duration of your event, or for a period based on your use case and requirements. The behavior of these response headers is discussed in more detail in the Expires and Cache-Control: max-age="..." section.

Note

In Live video streaming, ensure that the encoder and Unified Origin have started running before announcing the URIs to media players. This can reduce the chances of caching (at the CDN or Origin Shield cache) a Master Playlist with fewer media tracks than the ones the encoder is pushing.

Handling Live to VoD

In Live streaming use cases, an encoder may signal Unified Origin when the Live streaming event ends. After this point, the Live Media Presentation (in MPEG-DASH) and the Media Playlists (in HLS) will switch to a VoD presentation. Therefore, Unified Origin will no longer generate Cache-Control headers. If you need to cache the media for a longer time after the Live event has finished, you will need to create new caching rules that keep the objects in cache for longer periods than the Cache-Control headers generated during the Live event allowed.

HTTP response codes

In many cases, intermediate servers forward invalid requests to origin shield caches that were initiated by misbehaving media players or by DDoS attacks. This type of behavior can increase the load at Unified Origin if the origin shield cache does not appropriately cache the error status codes.

Origin shield caches should act upon the status code and response headers returned by the upstream server. For example, by actively tracking client request errors, requests can be automatically routed to a secondary origin shield cache location if the initial origin shield cache is unavailable; otherwise, the origin shield cache should store the response in cache and use it to reply to the request.

By default, the status codes defined as cacheable in [RFC7231] [1] are the following: 200, 203, 204, 206, 300, 301, 404, 405, 410, 414, and 501. A cache server can reuse responses with these status codes using heuristic expiration, unless otherwise indicated by the method definition or explicit cache controls in HTTP/1.1 [RFC7234] [2]; all other status codes are not cacheable by default.

For both Live and VoD streaming cases, an origin shield cache should respect the Cache-Control and Expires headers generated by Unified Origin. Status codes other than 200 and 206 may be cached for a short period (a short time to live, TTL) to reduce the load hitting Unified Origin.
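The policy described above can be summarized in a small decision function. This is a sketch under stated assumptions: the `cache_ttl` name, the one-second default for non-200/206 responses, and the choice to cache only the status codes from the default list are all illustrative.

```python
# Status codes that are cacheable by default, as listed above.
HEURISTICALLY_CACHEABLE = frozenset(
    {200, 203, 204, 206, 300, 301, 404, 405, 410, 414, 501}
)


def cache_ttl(status, origin_ttl, short_ttl=1.0):
    """Return the TTL in seconds for a response, or None to skip caching.

    `origin_ttl` is the lifetime derived from the Cache-Control or Expires
    headers sent by the origin (None if the origin sent neither).
    """
    if status in (200, 206):
        return origin_ttl          # respect the origin's headers
    if status in HEURISTICALLY_CACHEABLE:
        return short_ttl           # cache briefly to shield the origin
    return None                    # not cacheable by default
```

A 404 for a segment that is not yet available is then absorbed by the cache for one second instead of being forwarded to Unified Origin on every retry.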

Error caching

In video delivery use cases such as Live or VoD2Live, a new media segment becomes available at a specific time. Media players can therefore misbehave by requesting media segments that are not yet available, which produces 4XX status codes. To mitigate the resulting increase of load at Unified Origin, we recommend caching these error responses with a short TTL: at least one second, and at most half the media segment duration.
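As a worked example of this recommendation, the helper below clamps a requested error TTL to the recommended range. The `clamp_error_ttl` name is hypothetical. For segments shorter than two seconds, half the segment duration falls below one second, and this sketch resolves the conflict by keeping the one-second minimum.

```python
def clamp_error_ttl(requested_ttl, segment_duration):
    """Clamp an error TTL to [1 second, half the segment duration]."""
    lower = 1.0
    upper = max(lower, segment_duration / 2.0)
    return min(max(requested_ttl, lower), upper)
```

With four-second segments the allowed range is one to two seconds, so a requested TTL of five seconds is reduced to two.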

Note

More information about the error codes for each output format can be found in the Custom HTTP Status codes (Apache) section.

Content aware key caching

A cache key is a unique identifier of an object stored in cache. An origin shield cache may fail to locate an object in cache (miss request) if the cached object's key does not match the requested full URI (including query strings). For example, if the query string in the URI has different keys, values, or even order of those key-value pairs, it will be considered a separate cache key. Consequently, the origin shield cache will unnecessarily forward the request to Unified Origin.

For example, if we do not take query strings into consideration when generating cache keys for URIs A, B, and C, the origin shield cache will treat them as the same cached object. In contrast, if we take the query string and its parameters into consideration when generating the cache key, URIs A, B, and C will be seen as three different cached objects. Therefore, more granularity in the generation of cache keys increases the number of objects stored in cache.

(A): http://example.com

(B): http://example.com?asdf=asdf

(C): http://example.com?asdf=zxcv
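The effect of both choices on URIs A, B, and C can be reproduced with a small key-generation function. The sketch below is illustrative: `cache_key` is a hypothetical helper, and sorting the query parameters assumes that their order carries no meaning for the upstream server.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit


def cache_key(url, include_query=True):
    """Build a cache key, optionally including a normalized query string."""
    parts = urlsplit(url)
    key = f"{parts.scheme}://{parts.netloc.lower()}{parts.path or '/'}"
    if include_query and parts.query:
        # Sort the pairs so that parameter order does not split the cache.
        pairs = sorted(parse_qsl(parts.query, keep_blank_values=True))
        key += "?" + urlencode(pairs)
    return key
```

Without the query string, A, B, and C share one key. With it, they produce three keys, while two URIs that differ only in parameter order still map to the same cached object.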

Note

Unified Origin supports query string parameters for dynamic track selection (see Using dynamic track selection) and for the creation of Virtual subclips when requesting the media presentation.

In addition to using the full URI (including query strings) to generate cache keys, an origin shield cache can take HTTP request and response headers into consideration. In previous sections, we discussed the Vary header, which tells the origin shield cache which request headers a response varies on, so that it may store more than one object for the same URI. There are more advanced methods for storing objects in cache, such as recency-based, frequency-based, size-based, or a combination of these methods. However, these methods are out of the scope of this document.

Mitigate malicious requests

An origin shield cache may be overloaded by many requests that aim to destabilize a media streaming service. This type of request behavior can be considered a denial-of-service (DoS) attack. If the origin shield cache has not been configured to block these types of requests, it will forward all of them to the upstream server (e.g., Unified Origin). The requests hitting the upstream server can potentially cause service downtime and introduce latency towards end users.

There are many methods for mitigating malicious requests in modern web servers (e.g., [3]). Some of these methods are listed below. However, this document only discusses the first three methods and considers the rest out of scope.

URL signing

Stop invalid requests

Request rate limiting

Connections limiting

Closing slow connections

Denylisting IP Addresses

Allowing IP Addresses

Blocking requests

Limiting connections to backend server (e.g., origin servers)

URL signing

This method restricts the requests that are allowed to reach Unified Origin. The origin shield cache is in charge of verifying the signature of each incoming request and deciding whether to forward the request to Unified Origin or to block it. This is useful for granting access to users without credentials and for preventing users from requesting certain content. URL signing is an authentication method based on a URL's query string parameters; however, it can also use incoming request headers as part of the verification.
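A minimal sketch of URL signing, assuming an HMAC-SHA256 signature over the path and an expiry timestamp, could look as follows. The `expires` and `sig` parameter names, the shared secret, and both function names are hypothetical; CDNs and web servers each define their own signing scheme.

```python
import hashlib
import hmac
from urllib.parse import parse_qsl, urlsplit

SECRET = b"replace-with-shared-secret"  # hypothetical shared secret


def sign_url(path, expires, secret=SECRET):
    """Append an expiry time and an HMAC-SHA256 signature to a path."""
    message = f"{path}?expires={expires}".encode()
    signature = hmac.new(secret, message, hashlib.sha256).hexdigest()
    return f"{path}?expires={expires}&sig={signature}"


def verify_url(url, now, secret=SECRET):
    """Return True if the signature is valid and the URL has not expired."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    try:
        expires = int(query["expires"])
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False
    message = f"{parts.path}?expires={query['expires']}".encode()
    expected = hmac.new(secret, message, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking the signature via timing.
    return hmac.compare_digest(expected.encode(), query.get("sig", "").encode())
```

The origin shield cache would call `verify_url` on each incoming request and forward only those that pass; a request with a modified path or an expired timestamp is blocked.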

For example, in Live media streaming use cases, media players may misbehave by indiscriminately requesting different subclips of the Media Presentation through the vbegin query string parameter that Unified Origin supports. These undesired requests can overload Unified Origin and introduce latency if they are not handled properly. Therefore, a simple approach is to create a rule in the origin shield cache that blocks all requests that do not contain a specific value for the query key vbegin. More information about the query strings supported by Unified Origin, such as vbegin, can be found in the Virtual subclips section.
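Expressed as code, such a rule is a simple allowlist check on the vbegin query key. The sketch below is an illustration only: `allow_request` is a hypothetical helper, the example timestamp is made up, and in practice the rule would be written in the configuration language of the origin shield cache.

```python
from urllib.parse import parse_qs, urlsplit


def allow_request(url, allowed_vbegin):
    """Allow a request only if it carries exactly one permitted vbegin value."""
    values = parse_qs(urlsplit(url).query).get("vbegin", [])
    return len(values) == 1 and values[0] in allowed_vbegin
```

For example, with `allowed_vbegin = {"2021-01-01T00:00:00Z"}`, a request with any other vbegin value, or with no vbegin at all, is blocked before it reaches Unified Origin.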

Request rate limiting

This method limits the number of incoming requests accepted within a certain time window to mitigate DDoS attacks. Rate limiting is commonly implemented in modern web servers to stop web scrapers from stealing content and to block brute-force login attempts. Nevertheless, a single method such as rate limiting may not be enough to prevent DDoS attacks, but a combination of multiple methods can be.

Limiting the requests of suspicious media players at the origin shield cache can improve performance at Unified Origin and across your media workflow. A common way to apply request rate limiting is to use the X-Forwarded-For header, which helps you identify the trusted IPs of legitimate media players or CDNs.
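The two ideas in this section, identifying the client behind trusted proxies and limiting its request rate, can be sketched together in Python. This is not a web server configuration: the class and function names are hypothetical, the limiter keeps all state in memory, and X-Forwarded-For is only trusted when the direct peer is a known proxy because clients can set the header themselves.

```python
import time
from collections import defaultdict, deque


def client_ip(headers, peer_ip, trusted_proxies):
    """Pick the client address, trusting X-Forwarded-For only from known proxies."""
    if peer_ip not in trusted_proxies:
        return peer_ip
    hops = [h.strip() for h in headers.get("X-Forwarded-For", "").split(",") if h.strip()]
    # Walk from the nearest hop backwards and stop at the first untrusted one.
    for hop in reversed(hops):
        if hop not in trusted_proxies:
            return hop
    return peer_ip


class SlidingWindowLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self._max = max_requests
        self._window = window_seconds
        self._clock = clock
        self._hits = defaultdict(deque)   # client -> timestamps of recent requests

    def allow(self, client):
        now = self._clock()
        hits = self._hits[client]
        while hits and now - hits[0] >= self._window:
            hits.popleft()                # drop requests outside the window
        if len(hits) >= self._max:
            return False
        hits.append(now)
        return True
```

A request handler would call `client_ip(...)` first and then `limiter.allow(ip)`, rejecting the request (for example with status 429) when the limiter returns False.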

The following configuration provides an example of how to implement previous methods to mitigate DDoS attacks.

Cache invalidation/purge

Cache invalidation in origin shield caches and CDNs has become paramount for media delivery workflows. For example, no OTT service wants to offer viewers old versions of their media content or not be able to remove publicly-available content for a specific reason (e.g., copyrights).

HTTP proxy servers such as Nginx and Varnish Cache, and CDNs such as Akamai, Fastly, or Lumen, support the following methods for cache invalidation (for other CDNs, for instance Limelight Networks, please refer to the appropriate documentation). The following techniques can help improve content distribution in your media workflow, or even let you update versions of our software with the lowest possible risk.

TTL approach

The most common method to refresh or replace content is to set a TTL (time-to-live) in cache for objects. The TTL is set with max-age type response headers; see HTTP Response Headers for an outline of available headers. Typically a CDN provides an API to set them; see for instance the interface offered by Lumen.

Failover approach

For something like a library transcode or migration, an option is to use failover in the CDN. Here, a CDN-side rule is set up to fill from the new library path first and, if that returns a 4xx or 5xx, to fill from the old path.

For example, if you had http://example.com/published/somevideo/foo.mp4 in your CMS, with 'example.com' routing to the CDN, the rule would try http://new-origin.example.com/$PATH and serve that if it filled. If it returned a 404, the CDN would try http://old-origin.example.com/$PATH.
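The fill order of such a rule can be sketched as a loop over the candidate origins. In this illustration, `fetch` is a hypothetical callable that returns a (status, body) tuple for a URL, and the origin host names are the ones from the example above; an actual CDN expresses this rule in its own configuration.

```python
ORIGINS = ["http://new-origin.example.com", "http://old-origin.example.com"]


def fill_with_failover(path, fetch, origins=ORIGINS):
    """Try each origin in order; return the first response that is not 4xx/5xx.

    If every origin fails, the last error response is returned.
    """
    last = None
    for origin in origins:
        status, body = fetch(origin + path)
        if status < 400:
            return status, body
        last = (status, body)
    return last
```

During a migration, objects that already exist under the new library path are served from there, while everything else transparently falls back to the old path.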

Purge approach

CDNs also offer ways to actively purge caches.

Akamai, for instance, offers a purge API (please refer to their purge-methods section as well), whereas Lumen offers Content Invalidation. Both can be scripted or automated using CI tooling.

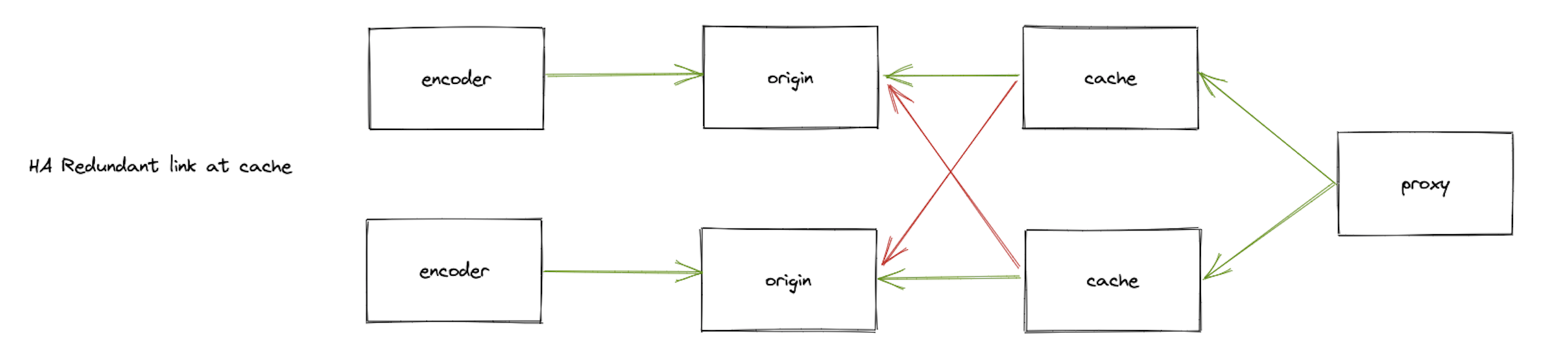

Redundant origin shield cache

In media delivery high availability becomes paramount. The use of redundant link at the cache layer is a method to prevent the crash of a video stream. The following image is an example of a redundant link at the cache layer.

[3] https://www.nginx.com/blog/mitigating-ddos-attacks-with-nginx-and-nginx-plus/